Web Speech API Demonstration

Click on the microphone icon and begin speaking for as long as you like.

No speech was detected. You may need to adjust your microphone settings.

No microphone was found. Ensure that a microphone is installed and that microphone settings are configured correctly.

Click the "Allow" button above to enable your microphone.

Permission to use microphone was denied.

Permission to use microphone is blocked. To change, go to chrome://settings/contentExceptions#media-stream

Web Speech API is not supported by this browser. Upgrade to Chrome version 25 or later.

Press Control-C to copy text.

(Command-C on Mac.)

Text sent to default email application.

(See chrome://settings/handlers to change.)

Text to speech REST API

The Speech service allows you to convert text into synthesized speech and get a list of supported voices for a region by using a REST API. In this article, you learn about authorization options, query options, how to structure a request, and how to interpret a response.

Use cases for the text to speech REST API are limited. Use it only in cases where you can't use the Speech SDK. For example, with the Speech SDK you can subscribe to events for more insights about the text to speech processing and results.

The text to speech REST API supports neural text to speech voices in many locales. Each available endpoint is associated with a region. A Speech resource key for the endpoint or region that you plan to use is required. Here are links to more information:

- For a complete list of voices, see Language and voice support for the Speech service.

- For information about regional availability, see Speech service supported regions.

- For Azure Government and Microsoft Azure operated by 21Vianet endpoints, see this article about sovereign clouds.

Costs vary for prebuilt neural voices (called Neural on the pricing page) and custom neural voices (called Custom Neural on the pricing page). For more information, see Speech service pricing.

Before you use the text to speech REST API, understand that you need to complete a token exchange as part of authentication to access the service. For more information, see Authentication.

Get a list of voices

You can use the tts.speech.microsoft.com/cognitiveservices/voices/list endpoint to get a full list of voices for a specific region or endpoint. Prefix the voices list endpoint with a region to get a list of voices for that region. For example, to get a list of voices for the westus region, use the https://westus.tts.speech.microsoft.com/cognitiveservices/voices/list endpoint. For a list of all supported regions, see the regions documentation.
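As a rough illustration of the region prefix described above, the following sketch builds the voices list URL and sends a GET request with a resource key. The function names `voicesListUrl` and `listVoices`, the region check, and the injectable `fetchImpl` parameter are assumptions made for this example; only the URL pattern and the `Ocp-Apim-Subscription-Key` header come from this article.

```javascript
// Build the regional voices-list URL, for example for "westus".
function voicesListUrl(region) {
  // Assumption: public region identifiers are lowercase letters and digits.
  if (!/^[a-z0-9]+$/.test(region)) {
    throw new Error(`Unexpected region identifier: ${region}`);
  }
  return `https://${region}.tts.speech.microsoft.com/cognitiveservices/voices/list`;
}

// Request the list of voices. No body is sent with this GET request.
// `fetchImpl` defaults to the global fetch (browsers and recent Node.js).
async function listVoices(region, resourceKey, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(voicesListUrl(region), {
    method: "GET",
    headers: { "Ocp-Apim-Subscription-Key": resourceKey },
  });
  if (!response.ok) {
    throw new Error(`Voices list request failed with HTTP ${response.status}`);
  }
  return response.json();
}
```

Passing `fetchImpl` explicitly keeps the sketch usable in environments without a global `fetch` and makes it easy to substitute a stub.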

Voices and styles in preview are only available in three service regions: East US, West Europe, and Southeast Asia.

Request headers

This table lists required and optional headers for text to speech requests:

Request body

A body isn't required for GET requests to this endpoint.

Sample request

This request requires only an authorization header:

Here's an example curl command:

Sample response

You should receive a response with a JSON body that includes all supported locales, voices, gender, styles, and other details. The WordsPerMinute property for each voice can be used to estimate the length of the output speech. This JSON example shows partial results to illustrate the structure of a response:
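The length estimate mentioned above could be sketched as follows. This is an approximation only: it assumes that `WordsPerMinute` may arrive as a string or a number, and it counts whitespace-separated words, which doesn't suit languages that aren't space-delimited. The function name `estimateSpeechSeconds` is hypothetical.

```javascript
// Estimate output speech duration in seconds from a voice's WordsPerMinute.
// Returns null when the voice entry has no usable WordsPerMinute value.
function estimateSpeechSeconds(text, voice) {
  const wordsPerMinute = Number(voice.WordsPerMinute);
  if (!Number.isFinite(wordsPerMinute) || wordsPerMinute <= 0) {
    return null;
  }
  // Count whitespace-separated words (an assumption about the input language).
  const wordCount = text.trim().split(/\s+/).filter(Boolean).length;
  return (wordCount * 60) / wordsPerMinute;
}
```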

HTTP status codes

The HTTP status code for each response indicates success or common errors.

Convert text to speech

The cognitiveservices/v1 endpoint allows you to convert text to speech by using Speech Synthesis Markup Language (SSML).

Regions and endpoints

These regions are supported for text to speech through the REST API. Be sure to select the endpoint that matches your Speech resource region.

Prebuilt neural voices

Use this table to determine availability of neural voices by region or endpoint:

Voices in preview are available in only these three regions: East US, West Europe, and Southeast Asia.

Custom neural voices

If you've created a custom neural voice font, use the endpoint that you've created. You can also use the following endpoints. Replace {deploymentId} with the deployment ID for your neural voice model.

The preceding regions are available for neural voice model hosting and real-time synthesis. Custom neural voice training is only available in some regions. But users can easily copy a neural voice model from these regions to other regions in the preceding list.

Long Audio API

The Long Audio API is available in multiple regions with unique endpoints:

If you're using a custom neural voice, the body of a request can be sent as plain text (ASCII or UTF-8). Otherwise, the body of each POST request is sent as SSML. SSML allows you to choose the voice and language of the synthesized speech that the text to speech feature returns. For a complete list of supported voices, see Language and voice support for the Speech service.

This HTTP request uses SSML to specify the voice and language. If the body length is long, and the resulting audio exceeds 10 minutes, it's truncated to 10 minutes. In other words, the audio length can't exceed 10 minutes.
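A request of this kind might be assembled as in the sketch below. It is not the article's original sample: the helper names, the example voice `en-US-JennyNeural`, the `User-Agent` value, and the choice of the `ogg-24khz-16bit-mono-opus` output format are assumptions for illustration. The sketch escapes the input text so that characters such as `&` and `<` don't break the SSML document.

```javascript
// Escape text so it can be placed safely inside an SSML element or attribute.
function escapeXml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Wrap plain text in a minimal SSML document that selects a language and voice.
function buildSsml(text, { lang = "en-US", voice = "en-US-JennyNeural" } = {}) {
  return (
    `<speak version="1.0" xml:lang="${escapeXml(lang)}">` +
    `<voice name="${escapeXml(voice)}">${escapeXml(text)}</voice>` +
    `</speak>`
  );
}

// Describe the POST request; pass `url` and `init` to fetch() to send it.
function buildSynthesisRequest(region, accessToken, text, options) {
  return {
    url: `https://${region}.tts.speech.microsoft.com/cognitiveservices/v1`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${accessToken}`,
        "Content-Type": "application/ssml+xml",
        "X-Microsoft-OutputFormat": "ogg-24khz-16bit-mono-opus",
        // Assumption: an application name; browsers may not let scripts set this.
        "User-Agent": "tts-rest-sketch",
      },
      body: buildSsml(text, options),
    },
  };
}
```

Keeping request construction separate from sending makes it straightforward to inspect the SSML body before any network call is made.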

* For the Content-Length, you should use your own content length. In most cases, this value is calculated automatically.

The HTTP status code for each response indicates success or common errors:

If the HTTP status is 200 OK, the body of the response contains an audio file in the requested format. This file can be played as it's transferred, saved to a buffer, or saved to a file.

Audio outputs

The supported streaming and nonstreaming audio formats are sent in each request as the X-Microsoft-OutputFormat header. Each format incorporates a bit rate and encoding type. The Speech service supports 48-kHz, 24-kHz, 16-kHz, and 8-kHz audio outputs. Each prebuilt neural voice model is available at 24kHz and high-fidelity 48kHz.

- NonStreaming

If you select 48kHz output format, the high-fidelity voice model with 48kHz will be invoked accordingly. The sample rates other than 24kHz and 48kHz can be obtained through upsampling or downsampling when synthesizing, for example, 44.1kHz is downsampled from 48kHz.

If your selected voice and output format have different bit rates, the audio is resampled as necessary. You can decode the ogg-24khz-16bit-mono-opus format by using the Opus codec.

Authentication

Each request requires an authorization header. This table illustrates which headers are supported for each feature:

When you're using the Ocp-Apim-Subscription-Key header, only your resource key must be provided. For example:

When you're using the Authorization: Bearer header, you need to make a request to the issueToken endpoint. In this request, you exchange your resource key for an access token that's valid for 10 minutes.

Another option is to use Microsoft Entra authentication that also uses the Authorization: Bearer header, but with a token issued via Microsoft Entra ID. See Use Microsoft Entra authentication.

How to get an access token

To get an access token, you need to make a request to the issueToken endpoint by using Ocp-Apim-Subscription-Key and your resource key.

The issueToken endpoint has this format:

Replace <REGION_IDENTIFIER> with the identifier that matches the region of your subscription.

Use the following samples to create your access token request.

HTTP sample

This example is a simple HTTP request to get a token. Replace YOUR_SUBSCRIPTION_KEY with your resource key for the Speech service. If your subscription isn't in the West US region, replace the Host header with your region's host name.

The body of the response contains the access token in JSON Web Token (JWT) format.

PowerShell sample

This example is a simple PowerShell script to get an access token. Replace YOUR_SUBSCRIPTION_KEY with your resource key for the Speech service. Make sure to use the correct endpoint for the region that matches your subscription. This example is currently set to West US.

cURL sample

cURL is a command-line tool available in Linux (and in the Windows Subsystem for Linux). This cURL command illustrates how to get an access token. Replace YOUR_SUBSCRIPTION_KEY with your resource key for the Speech service. Make sure to use the correct endpoint for the region that matches your subscription. This example is currently set to West US.

This C# class illustrates how to get an access token. Pass your resource key for the Speech service when you instantiate the class. If your subscription isn't in the West US region, change the value of FetchTokenUri to match the region for your subscription.

Python sample

How to use an access token

The access token should be sent to the service as the Authorization: Bearer <TOKEN> header. Each access token is valid for 10 minutes. You can get a new token at any time, but to minimize network traffic and latency, we recommend using the same token for nine minutes.
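The nine-minute reuse recommendation can be sketched as a small cache around whatever function fetches the token. Everything named here (`createTokenCache`, `issueTokenFetcher`, the injectable clock) is hypothetical, and the issueToken URL pattern in the second function is an assumption based on the `<REGION_IDENTIFIER>` placeholder described earlier; check it against your resource before use.

```javascript
// Reuse a token for nine minutes, one minute short of its ten-minute lifetime.
const TOKEN_REUSE_MS = 9 * 60 * 1000;

// Wrap an async token fetcher in a cache. `now` is injectable for testing.
function createTokenCache(fetchToken, now = Date.now) {
  let token = null;
  let fetchedAt = 0;
  return async function getToken() {
    if (token === null || now() - fetchedAt >= TOKEN_REUSE_MS) {
      token = await fetchToken();
      fetchedAt = now();
    }
    return token;
  };
}

// Hypothetical fetcher that exchanges a resource key for an access token.
function issueTokenFetcher(region, resourceKey, fetchImpl = globalThis.fetch) {
  return async () => {
    const response = await fetchImpl(
      // Assumed endpoint pattern for the issueToken request.
      `https://${region}.api.cognitive.microsoft.com/sts/v1.0/issueToken`,
      { method: "POST", headers: { "Ocp-Apim-Subscription-Key": resourceKey } }
    );
    if (!response.ok) {
      throw new Error(`issueToken failed with HTTP ${response.status}`);
    }
    return response.text(); // the token is returned as the response body
  };
}
```

A typical use would be `const getToken = createTokenCache(issueTokenFetcher("westus", key));` followed by `await getToken()` before each request. Concurrent callers may each trigger a fetch in this simplified version.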

Here's a sample HTTP request to the Speech to text REST API for short audio:

Use Microsoft Entra authentication

To use Microsoft Entra authentication with the Speech to text REST API for short audio, you need to create an access token. The steps to obtain the access token, which consists of the resource ID and the Microsoft Entra access token, are the same as when using the Speech SDK. Follow the steps in Use Microsoft Entra authentication:

- Create a Speech resource

- Configure the Speech resource for Microsoft Entra authentication

- Get a Microsoft Entra access token

- Get the Speech resource ID

After you obtain the resource ID and the Microsoft Entra access token, construct the actual access token in this format:

You need to include the "aad#" prefix and the "#" (hash) separator between resource ID and the access token.
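As a minimal sketch of the rule above, the following helpers join the "aad#" prefix, the resource ID, the "#" separator, and the Microsoft Entra access token, and then place the result in an Authorization: Bearer header. The function names are hypothetical, and the placeholder values in the usage note stand in for your own resource ID and token.

```javascript
// Combine the resource ID and the Microsoft Entra access token.
function buildEntraSpeechToken(resourceId, entraAccessToken) {
  if (!resourceId || !entraAccessToken) {
    throw new Error("Both the resource ID and the access token are required");
  }
  return `aad#${resourceId}#${entraAccessToken}`;
}

// Build the header object to send with a request.
function buildEntraAuthorizationHeader(resourceId, entraAccessToken) {
  return { Authorization: `Bearer ${buildEntraSpeechToken(resourceId, entraAccessToken)}` };
}
```

For example, `buildEntraSpeechToken("YOUR_RESOURCE_ID", "YOUR_ENTRA_TOKEN")` yields `aad#YOUR_RESOURCE_ID#YOUR_ENTRA_TOKEN`.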

To learn more about Microsoft Entra access tokens, including token lifetime, visit Access tokens in the Microsoft identity platform.

Voice driven web apps - Introduction to the Web Speech API

The new JavaScript Web Speech API makes it easy to add speech recognition to your web pages. This API allows fine control and flexibility over the speech recognition capabilities in Chrome version 25 and later. Here's an example with the recognized text appearing almost immediately while speaking.

Let’s take a look under the hood. First, we check to see if the browser supports the Web Speech API by checking if the webkitSpeechRecognition object exists. If not, we suggest the user upgrades their browser. (Since the API is still experimental, it's currently vendor prefixed.) Lastly, we create the webkitSpeechRecognition object which provides the speech interface, and set some of its attributes and event handlers.
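The check-then-create sequence just described might look like the sketch below. The helper names and the `scope` parameter (which defaults to the global object) are assumptions added so the logic can be exercised outside a browser, and also checking for an unprefixed SpeechRecognition is an addition to what the article describes.

```javascript
// Return the recognition constructor if the browser exposes one, else null.
function getSpeechRecognitionCtor(scope = globalThis) {
  return scope.SpeechRecognition || scope.webkitSpeechRecognition || null;
}

// Create and configure a recognizer, or return null when unsupported so the
// caller can suggest a browser upgrade.
function createRecognizer(scope = globalThis) {
  const RecognitionCtor = getSpeechRecognitionCtor(scope);
  if (!RecognitionCtor) {
    return null;
  }
  const recognition = new RecognitionCtor();
  recognition.continuous = true;      // keep listening through pauses
  recognition.interimResults = true;  // deliver early results that may change
  return recognition;
}
```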

The default value for continuous is false, meaning that when the user stops talking, speech recognition will end. This mode is great for simple text like short input fields. In this demo, we set it to true, so that recognition will continue even if the user pauses while speaking.

The default value for interimResults is false, meaning that the only results returned by the recognizer are final and will not change. The demo sets it to true so we get early, interim results that may change. Watch the demo carefully: the gray text is interim and sometimes changes, whereas the black text consists of responses from the recognizer that are marked final and will not change.

To get started, the user clicks on the microphone button, which triggers this code:

We set the spoken language for the speech recognizer "lang" to the BCP-47 value that the user has selected via the selection drop-down list, for example “en-US” for English-United States. If this is not set, it defaults to the lang of the HTML document root element and hierarchy. Chrome speech recognition supports numerous languages (see the “langs” table in the demo source), as well as some right-to-left languages that are not included in this demo, such as he-IL and ar-EG.

After setting the language, we call recognition.start() to activate the speech recognizer. Once it begins capturing audio, it calls the onstart event handler, and then for each new set of results, it calls the onresult event handler.

This handler concatenates all the results received so far into two strings: final_transcript and interim_transcript. The resulting strings may include "\n", such as when the user speaks “new paragraph”, so we use the linebreak function to convert these to HTML tags <br> or <p>. Finally it sets these strings as the innerHTML of their corresponding <span> elements: final_span which is styled with black text, and interim_span which is styled with gray text.

interim_transcript is a local variable, and is completely rebuilt each time this event is called because it’s possible that all interim results have changed since the last onresult event. We could do the same for final_transcript simply by starting the for loop at 0. However, because final text never changes, we’ve made the code here a bit more efficient by making final_transcript a global, so that this event can start the for loop at event.resultIndex and only append any new final text.
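The handler logic described in the last two paragraphs can be sketched as follows. This is a reconstruction for illustration, not the demo's exact source: `handleResult` returns the two strings instead of writing them to the page, and the body of `linebreak` is an assumption about how the newline conversion could work.

```javascript
// Kept across events: final text never changes, so it is only appended to.
let final_transcript = "";

// Process one result event and return both transcripts.
function handleResult(event) {
  let interim_transcript = ""; // rebuilt from scratch on every event
  for (let i = event.resultIndex; i < event.results.length; ++i) {
    const result = event.results[i];
    if (result.isFinal) {
      final_transcript += result[0].transcript;
    } else {
      interim_transcript += result[0].transcript;
    }
  }
  return { final_transcript, interim_transcript };
}

// Convert newlines to HTML: a blank line becomes a paragraph break,
// a single newline becomes a line break.
function linebreak(text) {
  return text.replace(/\n\n/g, "<p></p>").replace(/\n/g, "<br>");
}
```

In a page, the handler would be assigned with something like `recognition.onresult = (event) => { const t = handleResult(event); final_span.innerHTML = linebreak(t.final_transcript); interim_span.innerHTML = linebreak(t.interim_transcript); };`.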

That’s it! The rest of the code is there just to make everything look pretty. It maintains state, shows the user some informative messages, and swaps the GIF image on the microphone button between the static microphone, the mic-slash image, and mic-animate with the pulsating red dot.

The mic-slash image is shown when recognition.start() is called, and then replaced with mic-animate when onstart fires. Typically this happens so quickly that the slash is not noticeable, but the first time speech recognition is used, Chrome needs to ask the user for permission to use the microphone, in which case onstart only fires when and if the user allows permission. Pages hosted on HTTPS do not need to ask repeatedly for permission, whereas HTTP hosted pages do.

So make your web pages come alive by enabling them to listen to your users!

We’d love to hear your feedback...

- For comments on the W3C Web Speech API specification: email, mailing archive, community group

- For comments on Chrome’s implementation of this spec: email, mailing archive

Refer to the Chrome Privacy Whitepaper to learn how Google is handling voice data from this API.

Except as otherwise noted, the content of this page is licensed under the Creative Commons Attribution 4.0 License, and code samples are licensed under the Apache 2.0 License. For details, see the Google Developers Site Policies. Java is a registered trademark of Oracle and/or its affiliates.

Last updated 2013-01-13 UTC.

SpeechRecognition

This feature is not Baseline because it does not work in some of the most widely-used browsers.

The SpeechRecognition interface of the Web Speech API is the controller interface for the recognition service; this also handles the SpeechRecognitionEvent sent from the recognition service.

Note: On some browsers, like Chrome, using Speech Recognition on a web page involves a server-based recognition engine. Your audio is sent to a web service for recognition processing, so it won't work offline.

Constructor

- SpeechRecognition(): Creates a new SpeechRecognition object.

Instance properties

SpeechRecognition also inherits properties from its parent interface, EventTarget.

- grammars: Returns and sets a collection of SpeechGrammar objects that represent the grammars that will be understood by the current SpeechRecognition.

- lang: Returns and sets the language of the current SpeechRecognition. If not specified, this defaults to the HTML lang attribute value, or the user agent's language setting if that isn't set either.

- continuous: Controls whether continuous results are returned for each recognition, or only a single result. Defaults to single (false).

- interimResults: Controls whether interim results should be returned (true) or not (false). Interim results are results that are not yet final (that is, the SpeechRecognitionResult.isFinal property is false).

- maxAlternatives: Sets the maximum number of SpeechRecognitionAlternative objects provided per result. The default value is 1.

Instance methods

SpeechRecognition also inherits methods from its parent interface, EventTarget.

- abort(): Stops the speech recognition service from listening to incoming audio, and doesn't attempt to return a SpeechRecognitionResult.

- start(): Starts the speech recognition service listening to incoming audio with intent to recognize grammars associated with the current SpeechRecognition.

- stop(): Stops the speech recognition service from listening to incoming audio, and attempts to return a SpeechRecognitionResult using the audio captured so far.

Events

Listen to these events using addEventListener() or by assigning an event listener to the oneventname property of this interface.

- audiostart: Fired when the user agent has started to capture audio. Also available via the onaudiostart property.

- audioend: Fired when the user agent has finished capturing audio. Also available via the onaudioend property.

- end: Fired when the speech recognition service has disconnected. Also available via the onend property.

- error: Fired when a speech recognition error occurs. Also available via the onerror property.

- nomatch: Fired when the speech recognition service returns a final result with no significant recognition. This may involve some degree of recognition, which doesn't meet or exceed the confidence threshold. Also available via the onnomatch property.

- result: Fired when the speech recognition service returns a result, meaning that a word or phrase has been positively recognized and this has been communicated back to the app. Also available via the onresult property.

- soundstart: Fired when any sound (recognizable speech or not) has been detected. Also available via the onsoundstart property.

- soundend: Fired when any sound (recognizable speech or not) has stopped being detected. Also available via the onsoundend property.

- speechstart: Fired when sound that is recognized by the speech recognition service as speech has been detected. Also available via the onspeechstart property.

- speechend: Fired when speech recognized by the speech recognition service has stopped being detected. Also available via the onspeechend property.

- start: Fired when the speech recognition service has begun listening to incoming audio with intent to recognize grammars associated with the current SpeechRecognition. Also available via the onstart property.

In our simple Speech color changer example, we create a new SpeechRecognition object instance using the SpeechRecognition() constructor, create a new SpeechGrammarList, and set it to be the grammar that will be recognized by the SpeechRecognition instance using the SpeechRecognition.grammars property.

After some other values have been defined, we then set it so that the recognition service starts when a click event occurs (see SpeechRecognition.start()). When a result has been successfully recognized, the result event fires, we extract the color that was spoken from the event object, and then set the background color of the <html> element to that color.
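The result-handling step of that example could be sketched as below. This is an illustration under assumptions, not the example's original source: the function names are hypothetical, and checking the spoken word against a list of known colors is an added safeguard.

```javascript
// Extract the spoken color from a result event, or null if it isn't known.
function colorFromResult(event, knownColors) {
  // First alternative of the first result is the most likely transcript.
  const spoken = event.results[0][0].transcript.trim().toLowerCase();
  return knownColors.includes(spoken) ? spoken : null;
}

// Start recognition on click and apply the recognized color to `element`
// (for instance document.documentElement, the <html> element).
function attachColorChanger(recognition, element, knownColors) {
  element.onclick = () => recognition.start();
  recognition.onresult = (event) => {
    const color = colorFromResult(event, knownColors);
    if (color) {
      element.style.backgroundColor = color;
    }
  };
}
```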

DEV Community

Posted on Jan 2, 2024

Building a Real-time Speech-to-text Web App with Web Speech API

Happy New Year, everyone! In this short tutorial, we will build a simple yet useful real-time speech-to-text web app using the Web Speech API. Feature-wise, it will be straightforward: click a button to start recording, and your speech will be converted to text, displayed in real-time on the screen. We'll also play with voice commands; saying "stop recording" will halt the recording. Sounds fun? Okay, let's get into it. 😊

Web Speech API Overview

The Web Speech API is a browser technology that enables developers to integrate speech recognition and synthesis capabilities into web applications. It opens up possibilities for creating hands-free and voice-controlled features, enhancing accessibility and user experience.

Some use cases for the Web Speech API include voice commands, voice-driven interfaces, transcription services, and more.

Let's Get Started

Now, let's dive into building our real-time speech-to-text web app. I'm going to use vite.js to initiate the project, but feel free to use any build tool of your choice or none at all for this mini demo project.

- Create a new vite project:

- Choose "Vanilla" on the next screen and "JavaScript" on the following one. Use arrow keys on your keyboard to navigate up and down.

HTML Structure

CSS Styling

JavaScript Implementation

This simple web app utilizes the Web Speech API to convert spoken words into text in real-time. Users can start and stop recording with the provided buttons. Customize the design and functionalities further based on your project requirements.
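The "stop recording" voice command could be handled along the lines of the sketch below. The function name `processTranscript` and the decision to drop everything from the command onward are assumptions for illustration, not the tutorial's original code.

```javascript
// Matches the spoken command regardless of letter case.
const STOP_COMMAND = /\bstop recording\b/i;

// Split a transcript into the text to display and a stop flag.
function processTranscript(transcript) {
  const match = STOP_COMMAND.exec(transcript);
  if (!match) {
    return { text: transcript.trim(), shouldStop: false };
  }
  // Keep only what was said before the command.
  return { text: transcript.slice(0, match.index).trim(), shouldStop: true };
}
```

Inside an onresult handler, the app might call `processTranscript()` on the latest transcript, display `text`, and call `recognition.stop()` when `shouldStop` is true.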

Final demo: https://stt.nixx.dev

Feel free to explore the complete code on the GitHub repository.

Now, you have a basic understanding of how to create a real-time speech-to-text web app using the Web Speech API. Experiment with additional features and enhancements to make it even more versatile and user-friendly. 😊 🙏

The Developer’s Guide to Web Speech API: What It Is, How It Works, and More

Speech recognition and speech synthesis have had a dramatic impact on areas like accessibility. In this post, take a deep dive into the Web Speech API.

Web Speech API is a web technology that allows you to incorporate voice data into apps. It converts speech into text and vice versa using your web browser.

The Web Speech API was introduced in 2012 by the W3C community. A decade later, this API is still under development and has limited browser compatibility.

This API supports short pieces of input, e.g., a single spoken command, as well as lengthy, continuous input. The capability for extensive dictation makes it ideal for integration with the Applause app, while brief inputs work well for language translation.

Speech recognition has had a dramatic impact on accessibility. Users with disabilities can navigate the web more easily using their voices. Thus, this API could become key to making the web a friendlier and more efficient place.

The text-to-speech and speech-to-text functionalities are handled by two interfaces: speech synthesis and speech recognition.

Speech Recognition

In the speech recognition (SpeechRecognition) interface, you speak into a microphone and the speech recognition service then checks your words against its own grammar.

The API protects the privacy of its users by first asking permission to access your voice through a microphone. If the page using the API uses the HTTPS protocol, it asks for permission only once. Otherwise, the API will ask in every instance.

Your device probably already includes a speech recognition system, e.g., Siri for iOS or Android Speech. When using the speech recognition interface, the default system will be used. After the speech is recognized, it is converted and returned as a text string.

In "one-shot" speech recognition, the recognition ends as soon as the user stops speaking. This is useful for brief commands, like a web search for app testing sites, or making a call. In "continuous" recognition, the user must end the recognition manually by using a "stop" button.

At the moment, speech recognition for Web Speech API is only officially supported by two browsers: Chrome for Desktop and Android. Chrome requires the use of prefixed interfaces.

However, the Web Speech API is still experimental, and specifications could change. You can check whether your current browser supports the API by searching for the webkitSpeechRecognition object.

Speech Recognition Properties

Let’s start with the constructor: SpeechRecognition() (exposed as webkitSpeechRecognition() in Chrome).

Now let’s examine the callbacks for certain events:

- onstart: Triggered when the speech recognizer begins to listen to and recognize your speech. A message may be displayed to notify the user that the device is now listening.

- onend: Triggered each time the speech recognition session ends, for example when the user stops it.

- onerror: Triggered whenever a speech recognition error occurs. The event is delivered as a SpeechRecognitionErrorEvent (named SpeechRecognitionError in older drafts of the specification).

- onresult: Triggered when the speech recognition object has obtained results. It delivers both the interim results and the final results as a SpeechRecognitionEvent.

The SpeechRecognitionEvent object contains the following data:

- results[i]: The i-th entry in the array-like list of result objects; each entry is a SpeechRecognitionResult for one recognized word or phrase

- resultIndex: The lowest index in results that has changed since the previous event

- results[i][j]: The j-th alternative for a recognized word or phrase; the first alternative is the one deemed most probable

- results[i].isFinal: A Boolean value that indicates whether the result is final (true) or interim (false)

- results[i][j].transcript: The text representation of the alternative

- results[i][j].confidence: The probability of the result being correct (value range from 0 to 1)
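To make the indexing in the list above concrete, this sketch flattens a result event into one row per alternative. The function name `listAlternatives` is hypothetical, and more than one alternative per result only appears when maxAlternatives is set above its default of 1.

```javascript
// Return one row per alternative for every result that changed in this event.
function listAlternatives(event) {
  const rows = [];
  for (let i = event.resultIndex; i < event.results.length; i++) {
    const result = event.results[i];
    for (let j = 0; j < result.length; j++) {
      rows.push({
        resultIndex: i,
        alternativeIndex: j,
        isFinal: result.isFinal,
        transcript: result[j].transcript,
        confidence: result[j].confidence,
      });
    }
  }
  return rows;
}
```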

What properties should we configure on the speech recognition object? Let’s take a look.

Continuous vs One-Shot

Decide whether you need the speech recognition object to listen to you continuously until you turn it off, or whether you only need it to recognize a short phrase. The continuous property defaults to false, which gives one-shot recognition.

Let’s say you are using the technology for note-taking, to integrate with an inventory tracking template. You need to be able to speak at length with enough time to pause without sending the app back to sleep. You can set continuous to true like so:

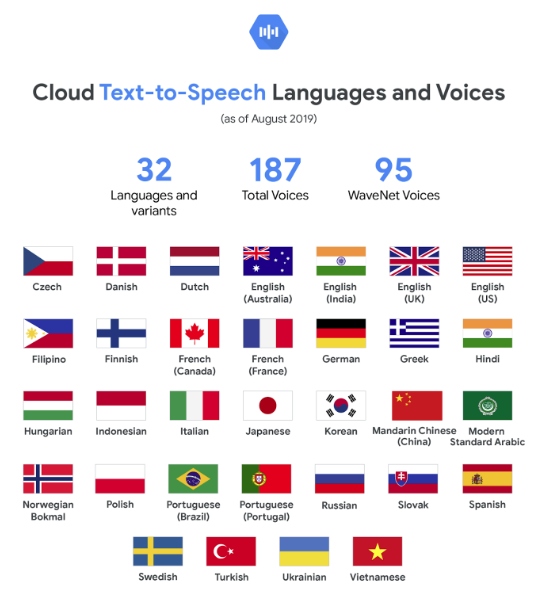

Image Sourced from Google

What language do you want the object to recognize? If your browser is set to English by default, it will automatically select English. However, you can also use locale codes.

Additionally, you could allow the user to select the language from a menu:

Interim Results

Interim results refer to results that are not yet complete or final. You can enable the object to display interim results as feedback to users by setting the interimResults property to true.
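The three settings covered so far (continuous, lang, and interimResults) can be applied together, as in this sketch. The helper name `configureRecognition` and its options object are assumptions for illustration; the property names are those of the SpeechRecognition interface.

```javascript
// Apply common settings to a recognition object and return it.
function configureRecognition(
  recognition,
  { continuous = false, lang, interimResults = false } = {}
) {
  recognition.continuous = continuous;         // false means one-shot recognition
  if (lang) {
    recognition.lang = lang;                   // a locale code such as "en-US"
  }
  recognition.interimResults = interimResults; // true shows results that may change
  return recognition;
}
```

For note-taking, a call might look like `configureRecognition(recognition, { continuous: true, lang: "en-US", interimResults: true })`.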

Start and Stop

If you have configured the speech recognition object to “continuous,” then you will need to set the onclick property of the start and stop buttons, like so:

This will allow you to control when your browser begins "listening" to you, and when it stops.
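One way to wire the two buttons is sketched below. The `wireButtons` helper and its `listening` flag are assumptions: the flag is there because calling start() on a recognizer that is already running throws an error, and the helper overwrites any existing onend handler.

```javascript
// Connect start and stop buttons to a recognition object.
function wireButtons(recognition, startButton, stopButton) {
  let listening = false;
  // Reset the flag whenever the recognition session ends.
  recognition.onend = () => {
    listening = false;
  };
  startButton.onclick = () => {
    if (listening) return; // avoid calling start() twice
    listening = true;
    recognition.start();
  };
  stopButton.onclick = () => {
    if (listening) recognition.stop();
  };
}
```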

So, we’ve taken an in-depth look at the speech recognition interface, its methods, and its properties. Now let’s explore the other side of Web Speech API.

Speech Synthesis

Speech synthesis is also known as text-to-speech or TTS. Speech synthesis means taking text from an app and converting it into speech, then playing it from your device’s speaker.

You can use speech synthesis for anything from driving directions to reading out lecture notes for online courses, to screen-reading for users with visual impairments.

In terms of browser support, speech synthesis for Web Speech API can be used in Firefox desktop and mobile from Gecko version 42+. However, you have to enable permissions first. Firefox OS 2.5+ supports speech synthesis by default; no permissions are required. Chrome and Android 33+ also support speech synthesis.

So, how do you get your browser to speak? The main controller interface for speech synthesis is SpeechSynthesis, but a number of related interfaces are required, e.g., for voices to be used for the output. Most operating systems will have a default speech synthesis system.

Put simply, you need to first create an instance of the SpeechSynthesisUtterance interface. The SpeechSynthesisUtterance interface contains the text the service will read, as well as information such as language, volume, pitch, and rate. After specifying these, put the instance into a queue that tells your browser what to speak and when.

Assign the text you need to be spoken to its "text" attribute, like so:

The language will default to your app or browser’s language unless specified otherwise using the .lang attribute.

After your website has loaded, the voiceschanged event may fire once the list of voices is available. To change your browser’s voice from its default, you can use the getVoices() method of SpeechSynthesis (available as window.speechSynthesis). This will show you all of the voices available.

The variety of voices will depend on your operating system. Google has its own set of default voices, as does Mac OS X. Finally, choose your preferred voice using the Array.find() method.

Customize your SpeechSynthesisUtterance as you wish. You can start, stop and pause the queue, or change the talking speed (“rate”).
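Putting the steps above together, a minimal sketch might look like this. The helper names and the `scope` option (which defaults to the global object so the logic can be tried with stand-in objects) are assumptions. In some browsers getVoices() returns an empty list until voiceschanged has fired, in which case the sketch falls back to the default voice.

```javascript
// Find the first available voice for a language, or null if there is none.
function pickVoice(voices, lang) {
  return voices.find((voice) => voice.lang === lang) || null;
}

// Create an utterance, customize it, and add it to the speaking queue.
function speakText(text, { lang = "en-US", rate = 1, scope = globalThis } = {}) {
  const utterance = new scope.SpeechSynthesisUtterance(text);
  utterance.lang = lang;
  utterance.rate = rate; // talking speed; 1 is the default
  const voice = pickVoice(scope.speechSynthesis.getVoices(), lang);
  if (voice) {
    utterance.voice = voice;
  }
  scope.speechSynthesis.speak(utterance);
  return utterance;
}
```

In a browser, `speakText("Hello there", { rate: 1.2 })` would queue the sentence, and `speechSynthesis.pause()`, `resume()`, and `cancel()` control the queue afterward.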

Web Speech API: The Pros and Cons

When should you use Web Speech API? It’s great fun to play with, but the technology is still in development. Still, there are plenty of potential use cases. Integrating APIs can help modernize IT infrastructure. Let’s look at what Web Speech API is able to do well, and which areas are ripe for improvement.

Boosts Productivity

Talking into a microphone is quicker and more efficient than typing a message. In today’s fast-paced working life, we may need to be able to access web pages while on the go.

It’s also fantastic for reducing the admin workload. Improvements in speech-to-text technology have the potential to significantly cut time spent on data entry tasks. STT could be integrated into audio-video conferencing to speed up note-taking in your stand-up meeting.

Accessibility

As previously mentioned, both STT and TTS can be wonderful tools for users with disabilities or support needs. Additionally, users who may struggle with writing or spelling for any reason may be better able to express themselves through speech recognition.

In this way, speech recognition technology could be a great equalizer on the internet. Encouraging the use of these tools in an office also promotes workplace accessibility.

Translation

Web Speech API could be a powerful tool for language translation due to its capability for both STT and TTS. At the moment, not every language is available; this is one area in which Web Speech API has yet to reach its full potential.

Offline Capability

One drawback is that speech recognition typically needs an internet connection to function. At the moment, browsers such as Chrome send the audio input to their servers, which then return the result. This limits the circumstances in which Web Speech can be used.

Incredible strides have been made to refine the accuracy of speech recognizers. You may occasionally still encounter some struggles, such as with technical jargon and other specialized vocabularies, or with regional accents. However, as of 2022, speech recognition software is approaching human-level accuracy.

In Conclusion

Although Web Speech API is experimental, it could be an amazing addition to your website or app. From top PropTech companies to marketing, all workplaces can use this API to supercharge efficiency. With a few simple lines of JavaScript, you can open up a whole new world of accessibility.

Speech recognition makes it easier and more efficient for your users to navigate the web. Get excited to watch this technology grow and evolve!

Opinions expressed by DZone contributors are their own.


Speech recognition in the browser using Web Speech API

Learn how to set up speech recognition in your browser using the Web Speech API and JavaScript.

Senior Developer Advocate

Speech recognition has become an increasingly popular feature in modern web applications. With the Web Speech API, developers can easily incorporate speech-to-text functionality into their web projects. This API provides the tools needed to perform real-time transcription directly in the browser, allowing users to control your app with voice commands or simply dictate text.

In this blog post, you’ll learn how to set up speech recognition using the Web Speech API. We’ll create a simple web page that lets users record their speech and convert it into text using the Web Speech API. Here is a screenshot of the final app:

Before we set up the app, let’s learn about the Web Speech API and how it works.

What is the Web Speech API?

The Web Speech API is a web technology that allows developers to add voice capabilities to their applications. It supports two key functions: speech recognition (turning spoken words into text) and speech synthesis (turning text into spoken words). This enables users to interact with websites using their voice, enhancing accessibility and user experience.

The Web Speech API consists of two parts:

- SpeechRecognition: Captures audio input through the user’s microphone, converts it into digital signals, and sends this data to a cloud-based speech recognition engine, such as Google’s Speech Recognition. The engine processes the speech and returns the transcribed text to the browser. This happens in real time, allowing for dynamic, continuous transcription or voice command execution as the user speaks.

- SpeechSynthesis: Takes text provided by the application and converts it into spoken words using the browser’s built-in voices. The exact voice and language used depend on the user’s device and operating system, but the browser handles the synthesis locally without needing an internet connection.
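For the SpeechRecognition part described above, a minimal sketch might look like this. The getSpeechRecognition helper is a hypothetical name; the fallback to webkitSpeechRecognition reflects the prefixed constructor that Chrome exposes.

```javascript
// Hypothetical helper: find the recognition constructor on a global-like object.
function getSpeechRecognition(scope) {
  return scope.SpeechRecognition || scope.webkitSpeechRecognition || null;
}

const Recognition = getSpeechRecognition(globalThis);
if (Recognition) {
  const recognition = new Recognition();
  recognition.lang = "en-US";

  // Fires when the engine returns a result for what was spoken.
  recognition.onresult = (event) => {
    const transcript = event.results[0][0].transcript;
    console.log("Heard:", transcript);
  };

  recognition.onerror = (event) => {
    console.error("Recognition error:", event.error);
  };

  recognition.start(); // the browser asks for microphone permission
} else {
  console.log("SpeechRecognition is not available in this environment.");
}
```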

The Web Speech API abstracts these complex processes, so developers can easily integrate voice features without needing specialized infrastructure or machine learning expertise.

Looking for an alternative?

If you need higher accuracy and more features, you can use the AssemblyAI JavaScript SDK for real-time transcription:

Prerequisites

Let’s walk through each step of setting up the Web Speech API on a website, and by the end, you’ll have a fully functional speech recognition web app.

To follow along with this guide, you need:

- A basic understanding of HTML, JavaScript, and CSS.

- A modern browser (like Chrome) that supports the Web Speech API.

The full code is also available on GitHub here.

Step 1: Set up the Project Structure

First, create a folder for your project, and inside it, add three files:

- index.html: To define the structure of your web page.

- speech-api.js: To handle speech recognition using JavaScript.

- style.css: To style the web page.

Step 2: Write the HTML File

We’ll start by writing the HTML code that will display the speech recognition UI. The page should contain a button for starting and stopping the recording, and a section for displaying the transcription results.

Add the following code to index.html:

This HTML sets up a simple layout with a button that will trigger speech recognition and a div to display the transcription results. If the Web Speech API isn’t supported by the browser, an error message will appear. The error message is hidden initially but can be made visible through JavaScript.

At the bottom of the body, we’ll include a script that points to the speech-api.js file with the Web Speech API logic.

Step 3: Implement Speech Recognition API logic

Now, we’ll move on to writing the JavaScript code to handle speech recognition. Create the speech-api.js file and add the following code:

Explanation of the JavaScript Code

- Checking browser support: We first check whether the SpeechRecognition API is supported by the browser. If not, we hide the recording button and display an error message.

- We initialize the SpeechRecognition object and set continuous to true so that the API continuously listens to the user’s speech until it’s manually stopped.

- interimResults is also set to true so that users can see the live transcription in real-time, instead of only showing text when the end of a sentence is detected.

- Handling the speech event: The onResult function is triggered whenever speech recognition detects spoken words. It iterates over the recognized results and updates the transcriptionResult div with the spoken text. Final results (when the speech has completed) are styled differently using the .final class.

- Handling the button click: The onClick function toggles the recording state. If speech recognition is active, it will stop the recognition; otherwise, it will start listening for speech.
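The steps above can be sketched as follows. This is an illustrative reconstruction, not the tutorial’s exact file: the element ids recordButton and errorMessage, the "Stop recording" label, and the helper names collectResults and setupSpeechRecognition are assumptions, while transcriptionResult, onResult, onClick, and the .final class follow the explanation above.

```javascript
// Flatten a SpeechRecognitionResultList-like object into plain items.
function collectResults(results) {
  const items = [];
  for (let i = 0; i < results.length; i++) {
    items.push({ text: results[i][0].transcript, isFinal: results[i].isFinal });
  }
  return items;
}

function setupSpeechRecognition(doc, scope) {
  const button = doc.getElementById("recordButton"); // assumed id
  const output = doc.getElementById("transcriptionResult");
  const error = doc.getElementById("errorMessage"); // assumed id
  const Recognition = scope.SpeechRecognition || scope.webkitSpeechRecognition;

  // Checking browser support: hide the button and show the error if missing.
  if (!Recognition) {
    button.hidden = true;
    error.hidden = false;
    return null;
  }

  const recognition = new Recognition();
  recognition.continuous = true; // keep listening until stopped manually
  recognition.interimResults = true; // show live, not-yet-final text
  let listening = false;

  // Handling the speech event: redraw the transcript on every result.
  recognition.onresult = function onResult(event) {
    output.textContent = "";
    for (const item of collectResults(event.results)) {
      const span = doc.createElement("span");
      span.textContent = item.text;
      if (item.isFinal) span.classList.add("final");
      output.appendChild(span);
    }
  };

  // Handling the button click: toggle between listening and stopped.
  button.addEventListener("click", function onClick() {
    if (listening) {
      recognition.stop();
      button.textContent = "Start recording";
    } else {
      recognition.start();
      button.textContent = "Stop recording"; // assumed label
    }
    listening = !listening;
  });

  return recognition;
}

// Only wire things up when running inside a page.
if (typeof document !== "undefined" && typeof window !== "undefined") {
  setupSpeechRecognition(document, window);
}
```

Writing each transcript through textContent rather than innerHTML keeps recognized speech from being interpreted as markup.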

Step 4: Style the Web Page

Next, let’s add some styles to make the page a bit more visually appealing. Create the style.css file and add the following styles:

This CSS file ensures the button is easily clickable and the transcription result is clearly visible. The .final class makes the final transcription results appear in bold black. Every time the end of a sentence is detected, you’ll notice the interim gray text changes to black text.

Step 5: Test the Web App

Once everything is in place, open the index.html file in a browser that supports the Web Speech API (such as Google Chrome). You should see a button labeled "Start recording". When you click it, the browser will prompt you to grant permission to use the microphone.

After you allow the browser access, the app will start transcribing any spoken words into text and display them on the screen. The transcription results will continue to appear until you click the button again to stop recording.

You’ve learned what the Web Speech API is and how you can use it. With just a few lines of code, you can easily add speech recognition to your web projects using the Web Speech API. Check out the official documentation to learn more.

If you’re looking for an alternative with more features and higher transcription accuracy, we also recommend trying out the AssemblyAI JavaScript SDK .


To learn more about how you can analyze audio files with AI and get inspired to build more Speech AI features into your app, check out more of our blog, like this article on Adding Punctuation, Casing, and Formatting to your transcriptions , or this guide on Summarizing audio with LLMs in Node.js .
